Late last year, Google announced the Android Developer Challenge, a contest for Android developers to show off new experiences made possible by on-device machine learning. Since then, tons of developers have submitted their ideas and been hard at work building their apps. Today, the winners of the challenge were announced!

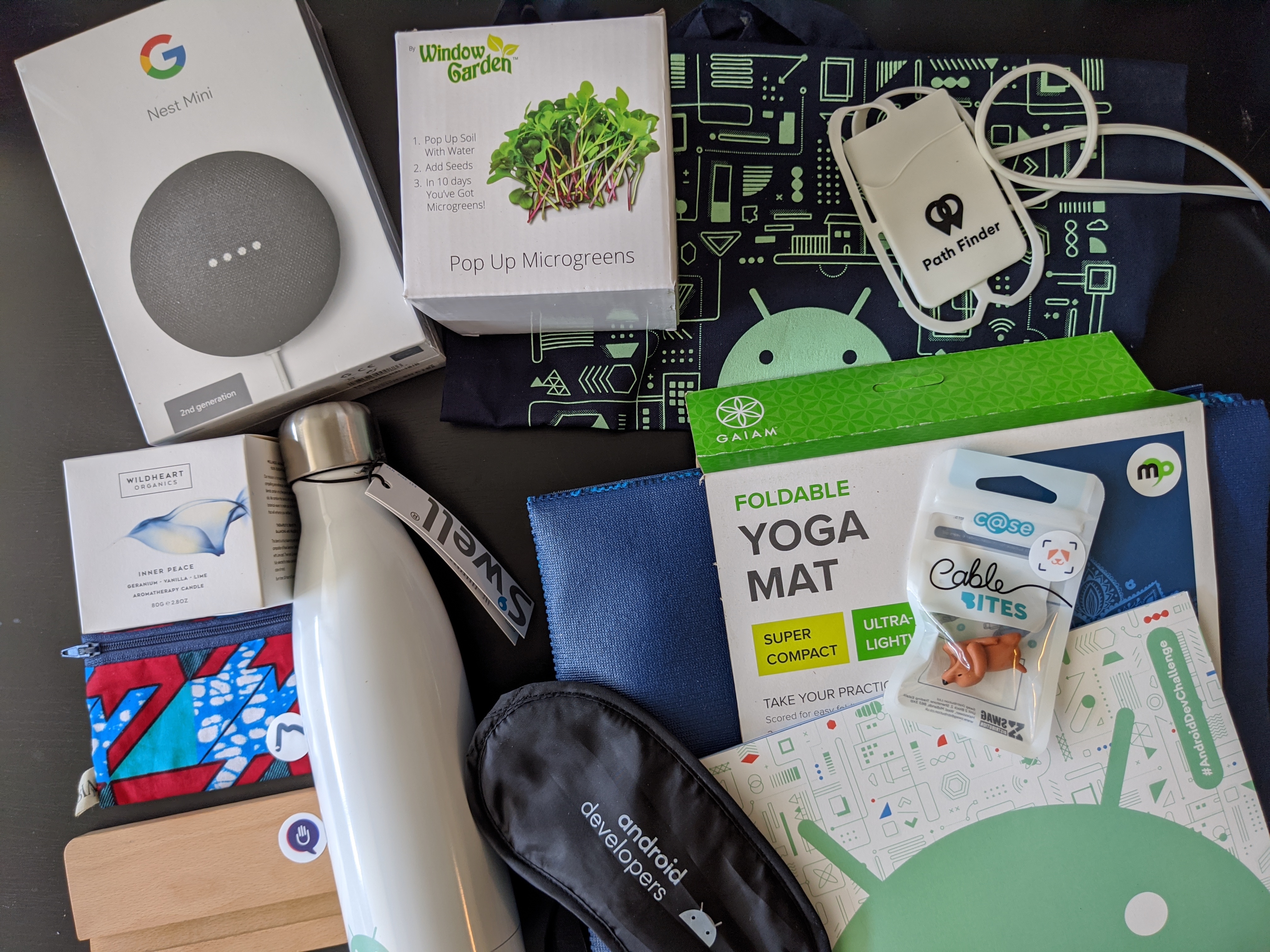

I was lucky to get access to a cool trial box that Google sent out, complete with little goodies to try out some of the apps from the winners! Here are some obligatory unboxing photos:

After checking out the cool loot, I downloaded the winning apps to try them for myself, and I want to show off some of my favorites.

Trashly

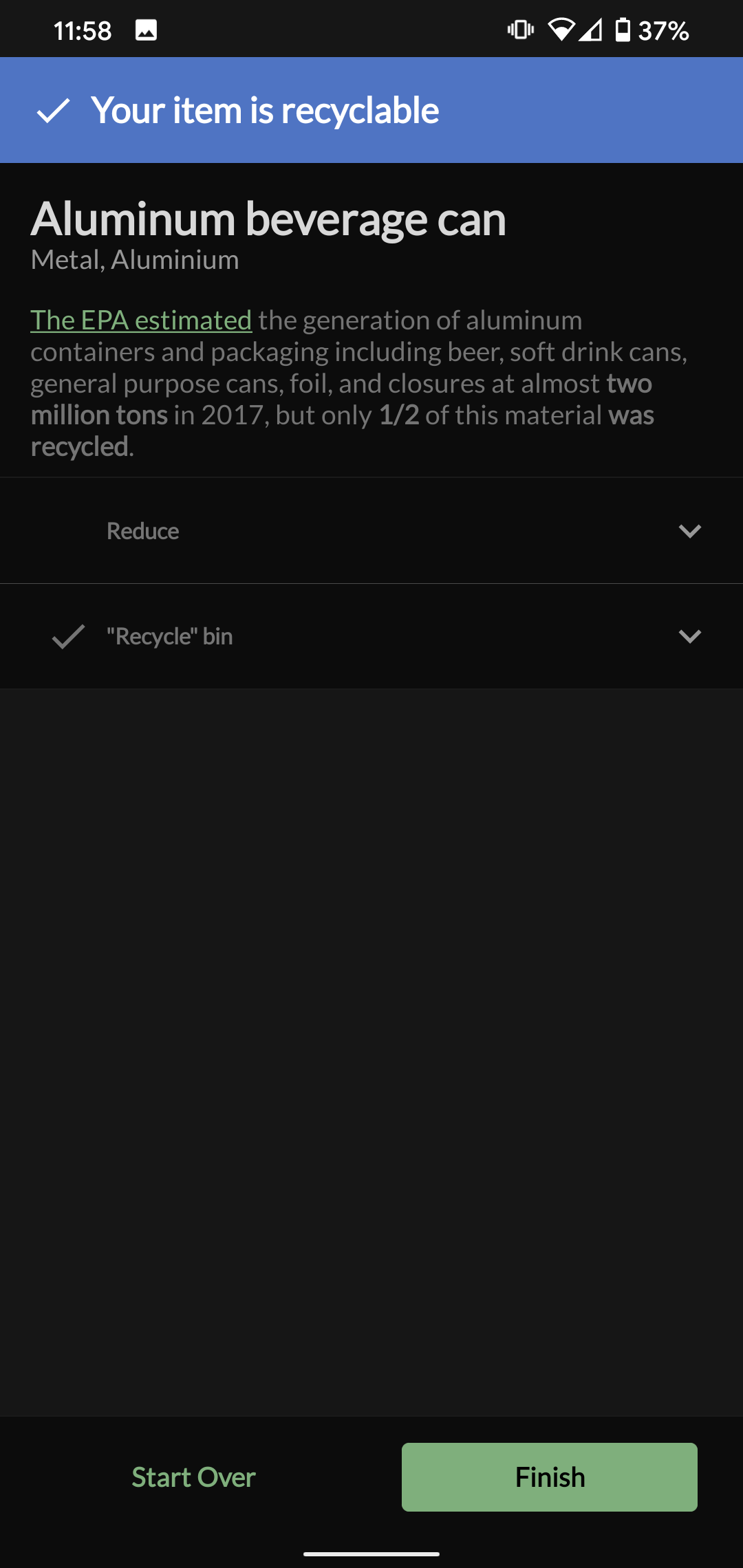

The first app I tried was Trashly. The goal of this app is to make recycling easier by providing up-to-date information about where and how to recycle your items. You can type in any item that you’re interested in recycling, but what’s cooler (and relevant to the challenge) is that you can point the camera at an object to find out 1) whether the item is recyclable, and 2) where you can go to recycle it. I tried this with a can of soda, which was instantly recognized:

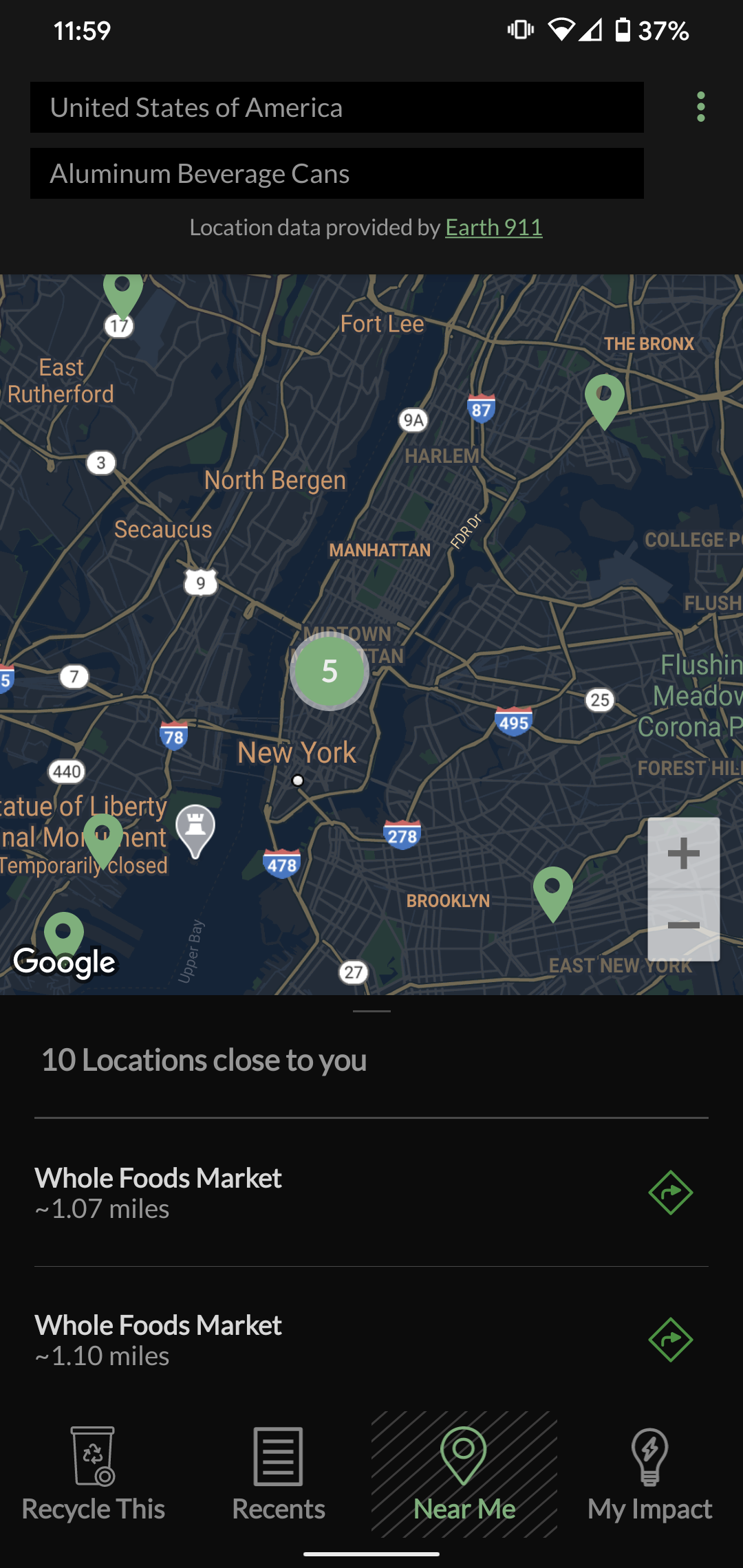

I was then given a map of nearby places where I could take my can to be recycled. Very cool and useful!

Leepi

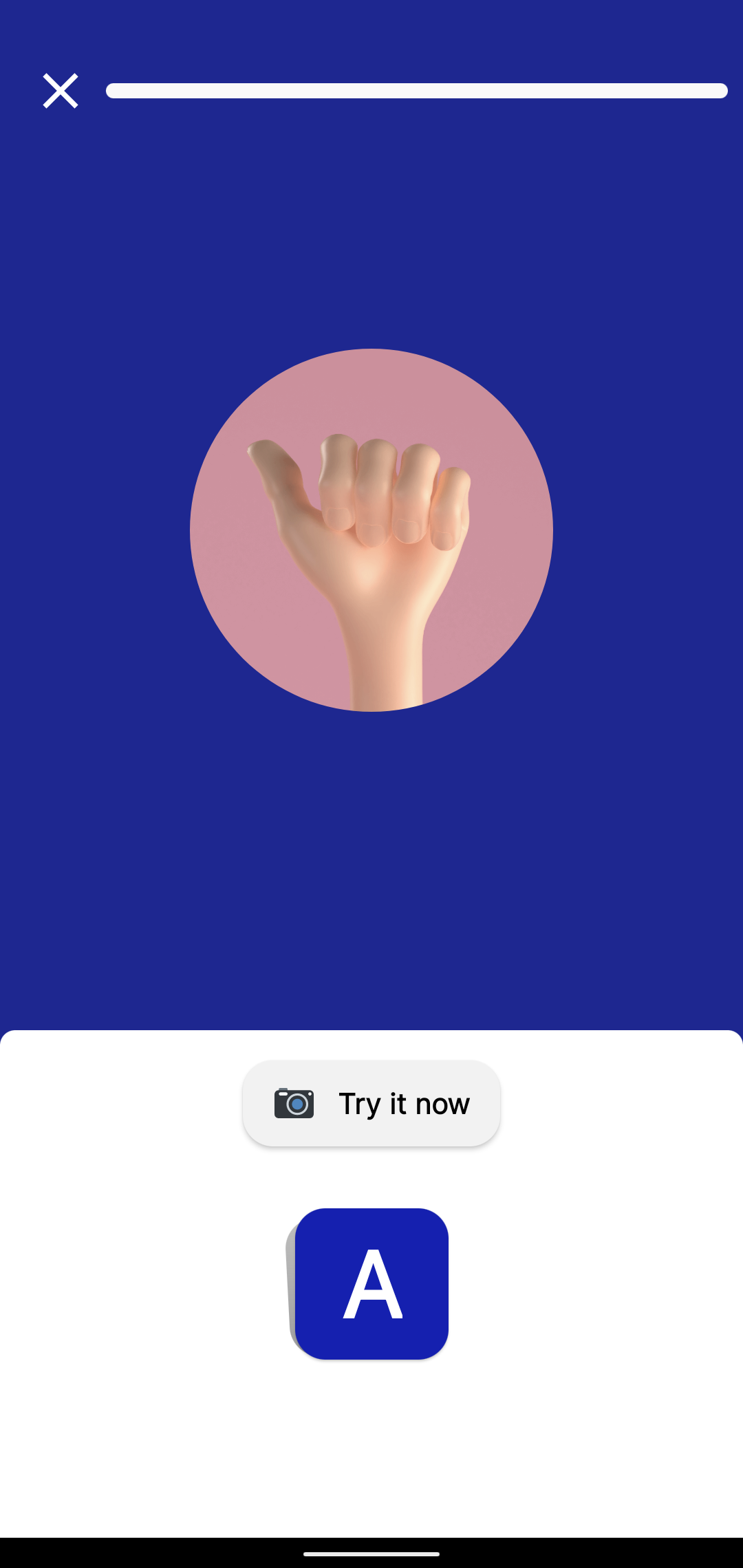

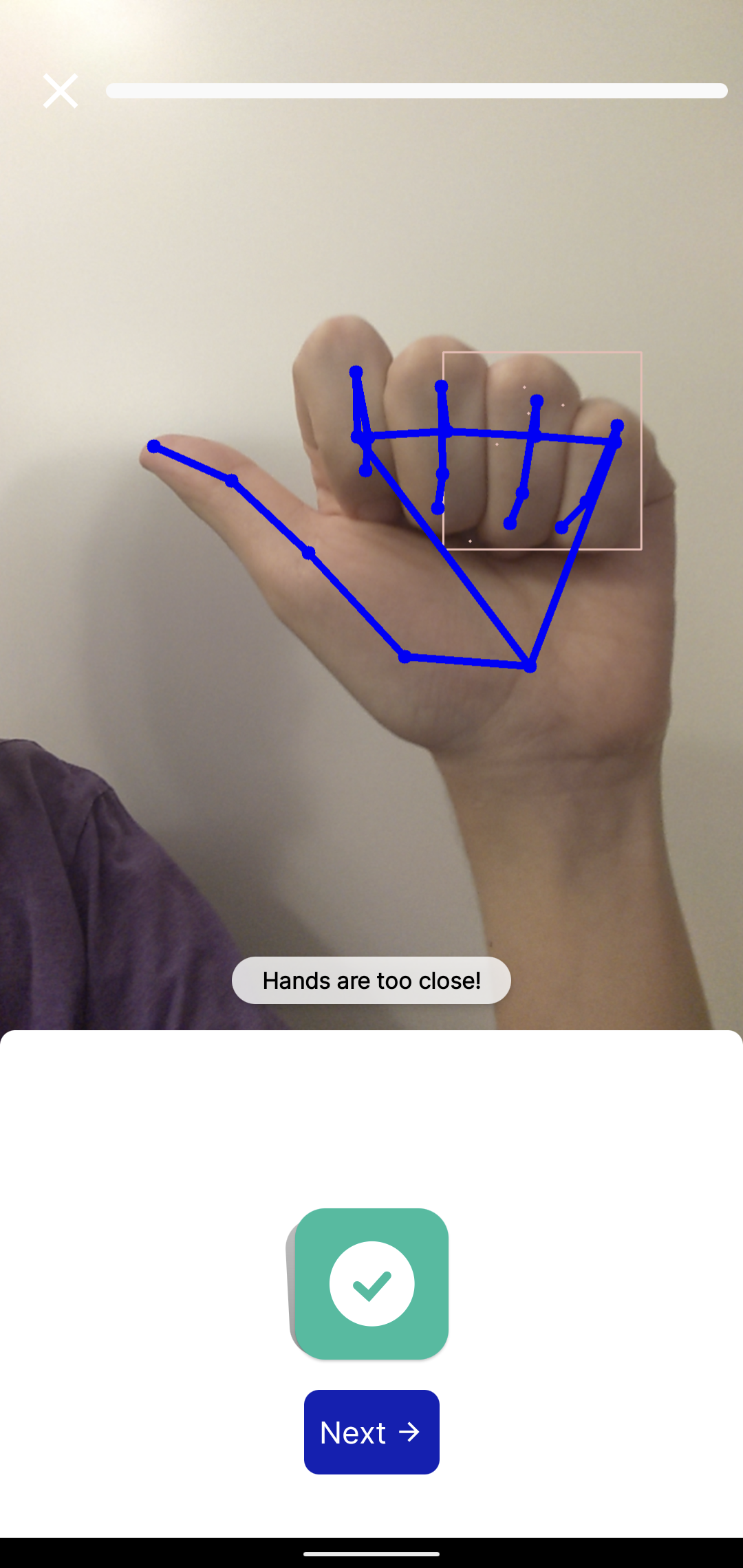

The next app that I tried was Leepi. It’s a fun, educational app that helps users learn American Sign Language. I had never learned sign language myself, so this was a really cool way to start! It uses the camera and on-device machine learning to interpret the user’s hand positions and verify that they are forming the signs and gestures correctly.

Path Finder

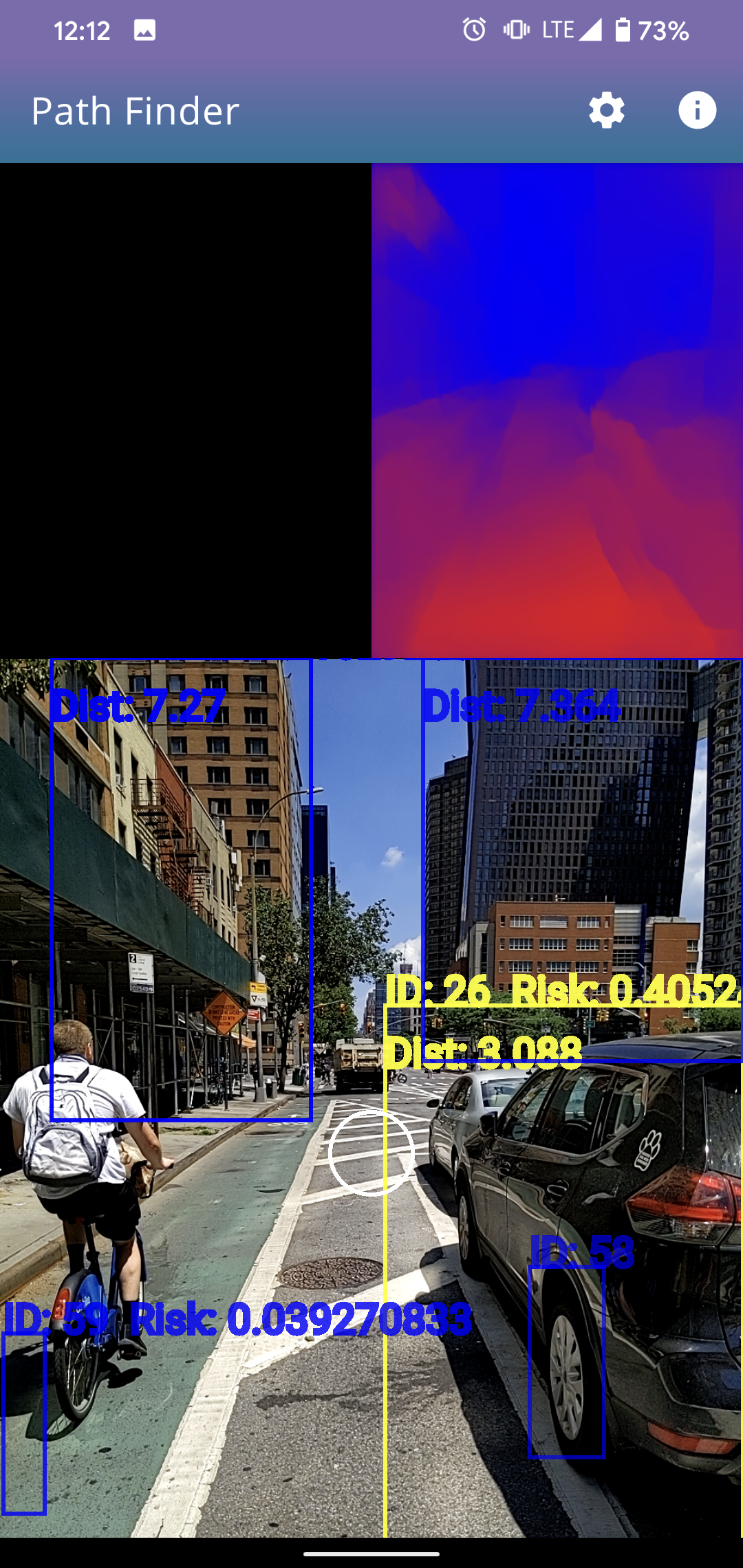

The last app I want to talk about is Path Finder. The gist of this app is to use the camera and machine learning to build a heatmap of obstacles that might be problematic for visually impaired people in public environments. I tried the app out on the streets of New York City and have some screenshots of the results below. I am not sure how useful this would be in practice, but it certainly looks interesting. I would be curious to hear what someone who is visually impaired thinks of the presentation format.

If you’re interested in seeing the very cool and interesting things you can do with on-device machine learning, I definitely encourage you to check out these and the rest of the winning apps. And if you’re an author of one of these applications, congratulations on a job well done!